Finally, it’s time to talk about what we have developed and which problems we have overcome in the last year. It is another significant step towards giving SketchAR users creative freedom, without the limits of convention.

There is a hunger for mobile apps offering virtual content, regardless of the limitations of current technology. Unfortunately, computer vision is a field in which software is closely tied to hardware, so most AR product concepts will always be held back by delays on the technical side.

Small teams rarely solve fundamental problems, but we can find a method that simplifies the task.

Why reinvent the wheel and why hasn’t anyone tried to do so before us?

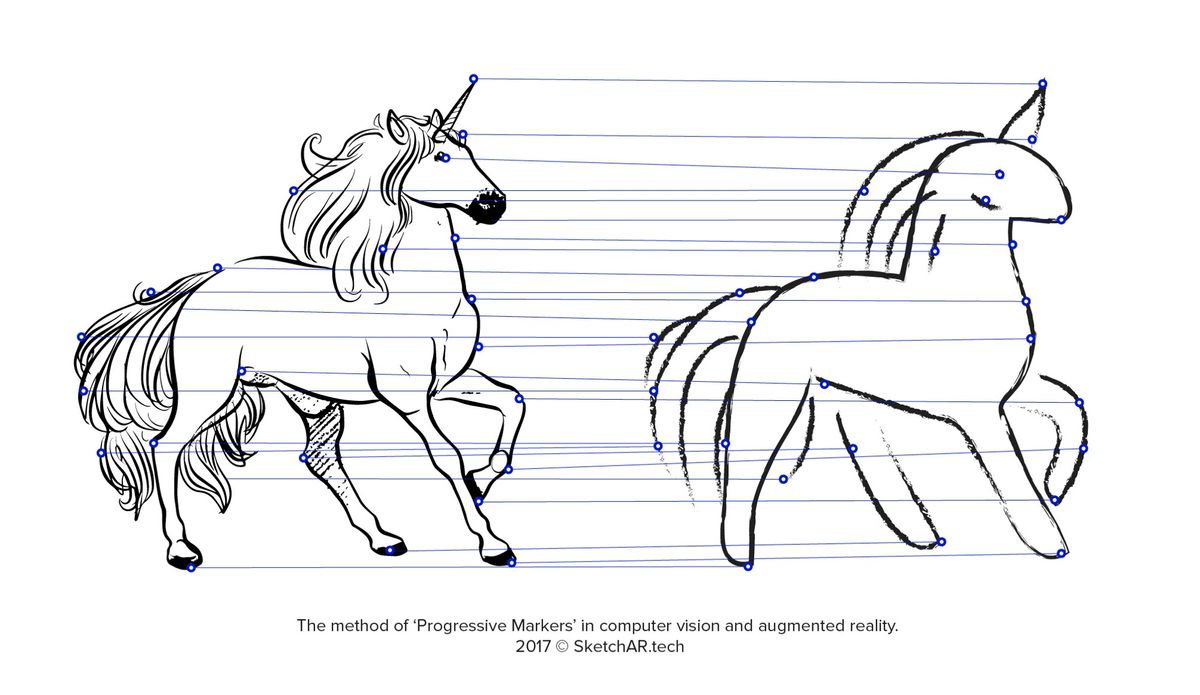

Most third-party libraries and platforms rely on pre-installed markers to fix a virtual object in position in the real world. In our case, the hand drawing the picture blocked these markers, so we had to develop our own method.

ARKit and ARCore, by contrast, are not based on markers (except those found in the environment), but virtual objects would be fixed more accurately if some pre-installed markers held them in place. The current method does detect and track key points to guide the user, but users cannot yet set their own reference markers. The second problem with ARKit and ARCore is that a virtual object cannot move along with the real world. For example, after placing a virtual object on a physical surface, we can’t move the surface and the object together as one, as if glued, because the virtual image stays where it was and does not follow the surface.
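The difference between an object fixed in the world and one "glued" to a surface can be illustrated with a small 2D sketch. It is only an illustration under simplifying assumptions: the function `paper_to_world`, the `(tx, ty, angle)` pose, and all the numbers are hypothetical and are not taken from ARKit, ARCore, or SketchAR.

```python
import math

def paper_to_world(point, pose):
    """Map a point from paper coordinates to world (table) coordinates.

    pose = (tx, ty, angle) is a hypothetical 2D pose of the sheet:
    a translation plus a rotation in radians.
    """
    x, y = point
    tx, ty, angle = pose
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y + tx, s * x + c * y + ty)

# The virtual image is placed 2 units right and 3 units up from the sheet's corner.
anchor_on_paper = (2.0, 3.0)

pose_before = (10.0, 5.0, 0.0)          # sheet lying straight
pose_after = (14.0, 5.0, math.pi / 2)   # the user slid and rotated the sheet

# World-anchored: the position is computed once and never updated,
# so the image stays behind when the sheet moves.
world_anchored = paper_to_world(anchor_on_paper, pose_before)

# Surface-anchored: the position is recomputed from the sheet's current pose,
# so the image follows the sheet.
surface_anchored = paper_to_world(anchor_on_paper, pose_after)

print("world-anchored:  ", world_anchored)
print("surface-anchored:", surface_anchored)
```

Keeping the anchor in the sheet's own coordinates only works if the sheet's pose can be tracked in every frame, which is where the tracking difficulties discussed in this article come in.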

As we have said before, SketchAR works with white paper, which is a “killer” for most computer vision algorithms. Our algorithms, however, are specially optimized for such conditions. They are stable and accurate enough to bind AR content to the paper, and they keep working even after ARKit tracking fails. Speed is also important. We have moved most calculations to the GPU, which allows SketchAR to work in real time on iPhones, starting with the 5SE model.

The ‘Progressive markers’ method.

This means that each line drawn by the artist turns into an anchor and helps hold the virtual object in place more stably.

The data collected also helps us keep developing a fully fledged personalized assistant for each user. By the way, we use machine learning and neural networks to analyze the data from each user’s drawings and to train on it. We will tell you about this in more detail in the following articles.
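The idea of lines becoming anchors can be sketched roughly as follows. This is not SketchAR's implementation: the class `ProgressiveAnchors`, its methods, and the translation-only motion model are assumptions made to keep the example short.

```python
class ProgressiveAnchors:
    """Hypothetical anchor store: every drawn stroke adds new reference points."""

    def __init__(self, initial_points):
        # Anchor positions in paper coordinates.
        self.points = list(initial_points)

    def add_stroke(self, stroke, step=2):
        """Keep every `step`-th point of a freshly drawn stroke as a new anchor."""
        self.points.extend(stroke[::step])

    def estimate_shift(self, observed):
        """Estimate how far the paper moved, using only the visible anchors.

        `observed` maps an anchor index to the position seen in the current
        frame; anchors hidden by the hand are simply missing from it.
        Returns None when no anchor is visible (tracking is lost).
        """
        if not observed:
            return None
        n = len(observed)
        dx = sum(pos[0] - self.points[i][0] for i, pos in observed.items()) / n
        dy = sum(pos[1] - self.points[i][1] for i, pos in observed.items()) / n
        return (dx, dy)


anchors = ProgressiveAnchors([(0.0, 0.0)])   # a single pre-installed marker

# The hand covers the only marker: nothing to track.
lost = anchors.estimate_shift({})

# The artist draws a line; two of its points become anchors 1 and 2.
anchors.add_stroke([(1.0, 1.0), (2.0, 1.0), (3.0, 1.0), (4.0, 1.0)])

# The marker is still covered, but the drawn line is visible and has moved.
shift = anchors.estimate_shift({1: (1.5, 1.25), 2: (3.5, 1.25)})

print(lost, shift)
```

The more the user draws, the more anchors remain visible around the hand, so the estimate rests on more points as the drawing progresses.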

Houston, we have a problem or the working principle — ‘have no fear.’

To understand how the problem was solved, it is necessary to go back to the beginning:

Environment. Every surface has a different texture, color, and structure. Algorithms can also be distracted by extraneous ‘noise’ in the environment. Filters solve this problem (‘channels’, like the channels in Photoshop).

Unifying surfaces is difficult unless, of course, the neural network is trained on real data from users. Training a neural network is an ongoing process and should never be thought of as a finished part of the product.

‘Canvas’. A canvas is a sheet of paper, a wall, or any other surface you might choose to draw on. ‘Canvases’ differ only in their dimensions. At the same time, white is the ‘enemy’ of computer vision. Please read our note ‘The White Paper Problem’ to understand why.
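A common general explanation for why white surfaces are hard, offered here as an assumption rather than a summary of that note, is that tracking algorithms look for points with strong local contrast, and a blank sheet has almost none. The toy function below, with a hypothetical name and threshold, counts such points in a tiny grayscale patch.

```python
def count_texture_points(gray, threshold=40):
    """Count pixels whose brightness changes sharply to the right or downwards.

    `gray` is a list of rows of brightness values (0 to 255).
    The threshold is an arbitrary value chosen for this illustration.
    """
    count = 0
    for y in range(len(gray) - 1):
        for x in range(len(gray[0]) - 1):
            gx = abs(gray[y][x + 1] - gray[y][x])
            gy = abs(gray[y + 1][x] - gray[y][x])
            if max(gx, gy) > threshold:
                count += 1
    return count


# An 8x8 patch of blank white paper.
blank = [[250] * 8 for _ in range(8)]

# The same patch after one dark pencil line has been drawn across it.
with_line = [row[:] for row in blank]
for x in range(8):
    with_line[4][x] = 30

print("blank paper:", count_texture_points(blank))
print("with a line:", count_texture_points(with_line))
```

The blank patch yields no points to hold on to, while a single drawn line already produces some.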

Drawing. Obviously, we know our users’ skill levels and don’t expect a sketch to match the original image perfectly. So our algorithm takes user inconsistencies into account.

Hands. Another ‘enemy’ of the app is the hand itself, which on screen crosses the surface, the markers, or the drawing. Its size and shape, the skin color, and even the way the pencil is held all divert part of the algorithm’s attention.

Device. The position of the device is not fixed. Users hold the phone with one hand, moving it closer to and further from the surface at different angles.

Solving.

After a detailed analysis of these problems, we understood how to ‘teach’ the app to see only what the user draws, filtering, but not ignoring, the environment.

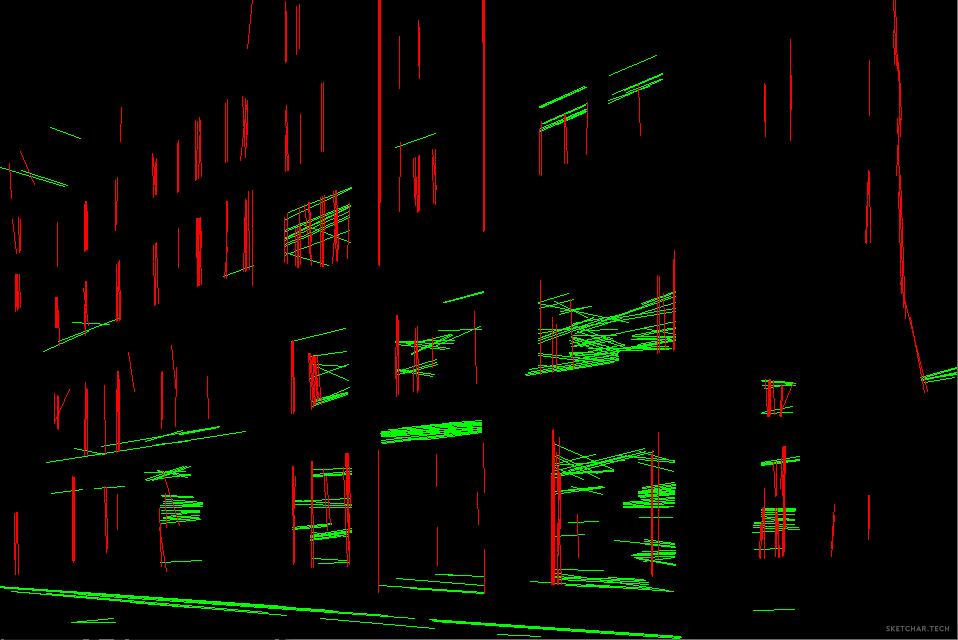

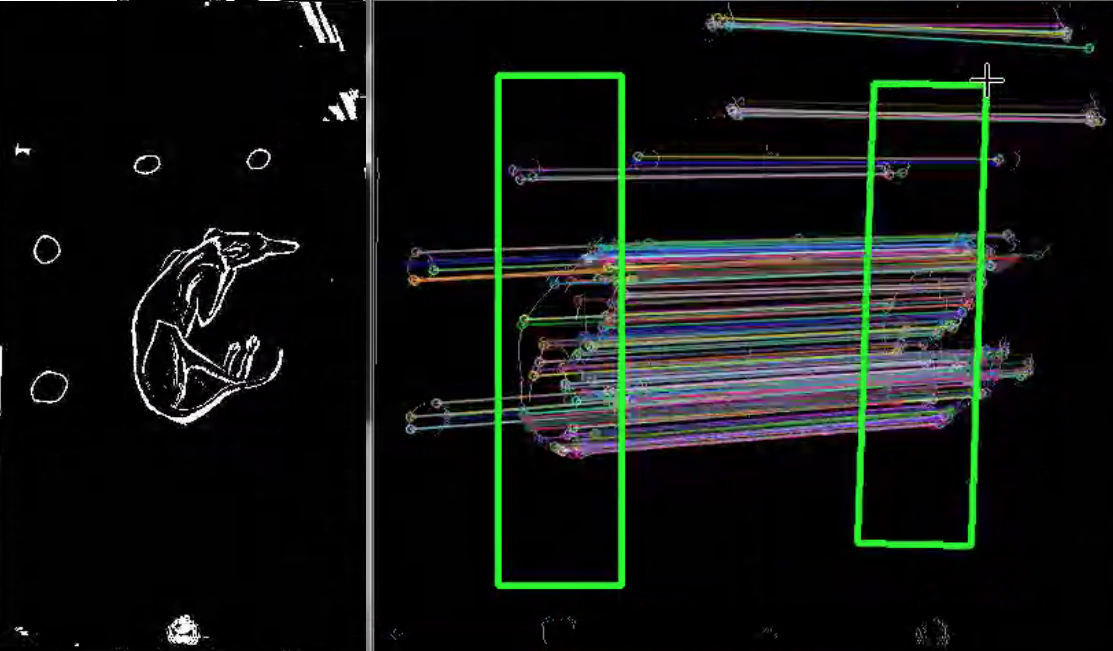

Below you can see how the surrounding world is transformed into a flat image and is divided into layers to analyze each of them through channels.

How does it work?

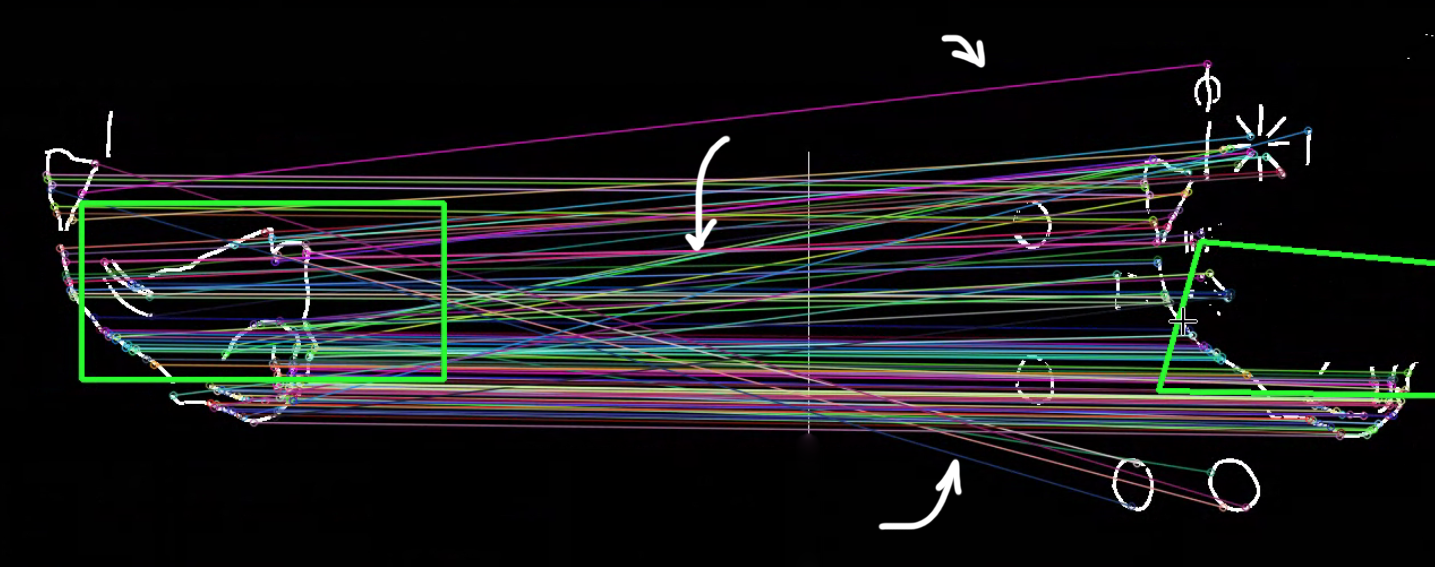

Converting the rendered content, mapping it to the original, and then placing a virtual object on the surface requires a multifaceted approach. Some of these layers are much more significant than others. Even a small part of the process shows how many operations take place every second.

This is a simple scheme of how the environment becomes a flat image. Then it is separated into many layers (channels) to be analyzed.

As described above, the layers are separated, and an algorithm teaches the camera to distinguish between everything it sees. For instance, the background is separated from the hand, the hand from its shadow, etc.
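As a rough illustration of separating a frame into layers, the sketch below converts each pixel to hue, saturation, and value channels and sorts it into one of four layers. The labels, the thresholds, and the function names are all assumptions made for this example; a real app would need something far more robust, and this is not SketchAR's actual algorithm.

```python
import colorsys

def classify_pixel(rgb):
    """Assign an (R, G, B) pixel to a layer using hypothetical thresholds."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    if v < 0.25:
        return "stroke"    # very dark: pencil line
    if s > 0.2 and h < 0.14:
        return "hand"      # saturated, reddish-orange hue: skin
    if v > 0.8:
        return "paper"     # bright and nearly colorless
    return "shadow"        # colorless but darker than the paper

def split_into_layers(frame):
    """Return a dict mapping each layer name to the set of (x, y) pixels in it."""
    layers = {"paper": set(), "hand": set(), "shadow": set(), "stroke": set()}
    for y, row in enumerate(frame):
        for x, rgb in enumerate(row):
            layers[classify_pixel(rgb)].add((x, y))
    return layers


# A tiny 2x2 "frame": paper, pencil stroke, hand, and the hand's shadow.
frame = [
    [(245, 245, 240), (40, 40, 45)],
    [(224, 172, 105), (150, 150, 155)],
]
layers = split_into_layers(frame)
for name, pixels in layers.items():
    print(name, sorted(pixels))
```

Once each layer is a separate mask, the drawn content can be analyzed without the hand or its shadow getting in the way.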

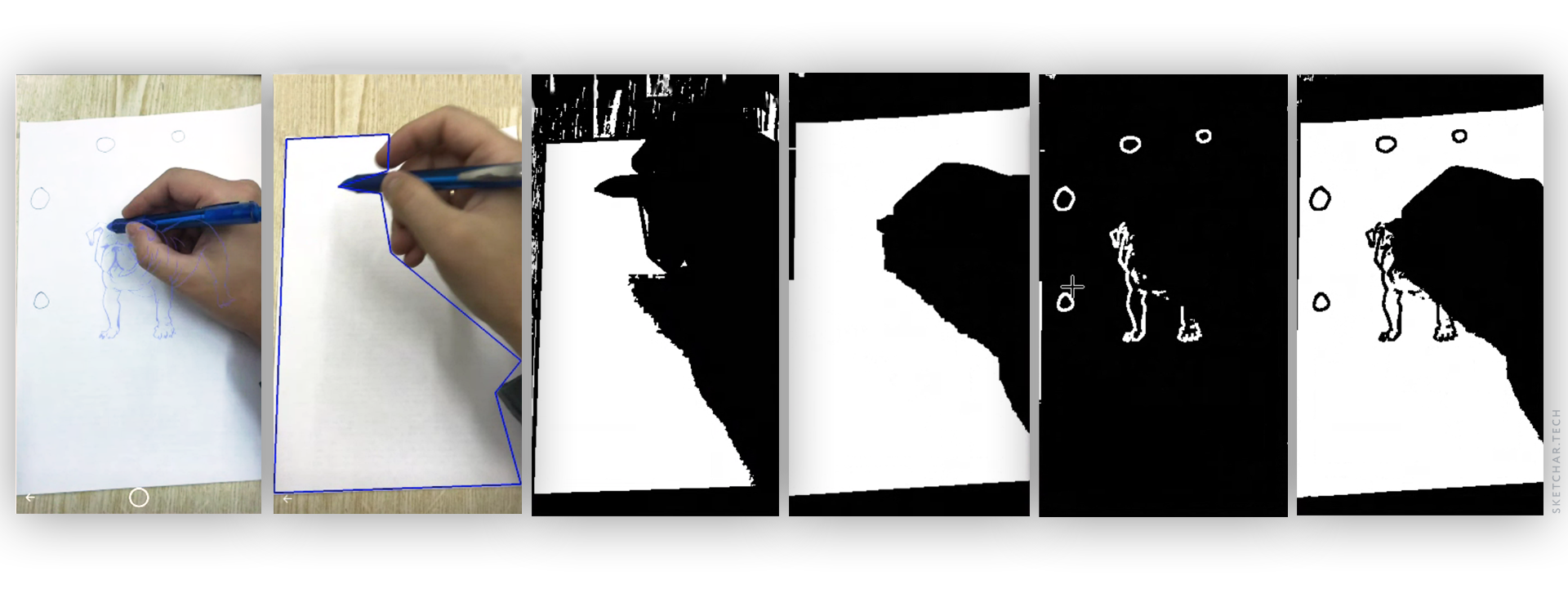

Screens are in order from left to right:

1. The virtual image located on the surface, visible to the user on the smartphone screen.

2. Recognition of the sheet on the surface.

3. The paper is roughly separated from the background. The noise on the surface of the table is visible, and the shadow from the hand is recognized as one object.

4. A clear separation of the hand from the surface.

5. Detection of only the drawn content.

6. Recognition of the drawn content relative to the environment.

When the app can recognize each of the objects clearly, it matches them with the original images. Remember, every step of the algorithm runs while the camera and the hands are continuously moving in the frame.

Without going into details, this approach solves many of the product’s problems and improves the user experience. It widens the range of uses for the data obtained, allowing us to take the product to new levels. It is a full-fledged assistant, backed up by data from machine learning and neural networks.

SketchAR has been available in the App Store for almost a year, in the Play Market for half a year, and even on MS HoloLens. Despite the enthusiastic reviews from users and the press, there is still no rival application with similar functionality. This underlines both the complexity of the problem and the originality of our algorithms.

Follow the updates, draw more and love technology, because it helps us to grow… and soon it will enslave humanity! ;)

Art for all!